
This is the fourth instalment in our series of insights on the Digital Services Act (DSA) – this covers the obligations on intermediary service providers under the DSA. We will be hosting a webinar on these issues on Wednesday 19 May – sign up here.


Obligations under the DSA

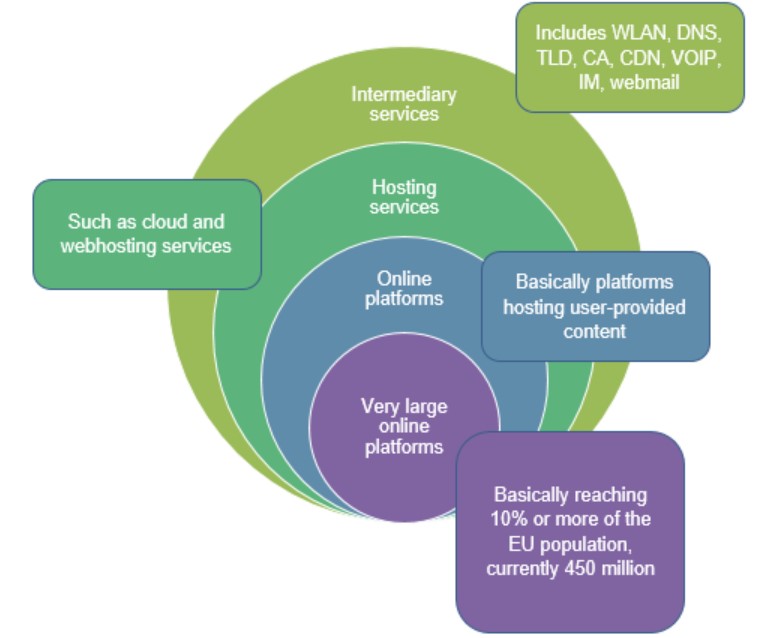

As noted in our previous posts, the DSA recognises that the size of different "intermediary services" and the scope of their user bases warrant different obligations and responsibilities. The proposed wording of the DSA therefore seeks to match the obligations and responsibilities of intermediaries to the role, size and impact of the organisation in the market – naturally, these become more onerous for the larger players. Importantly, the obligations are cumulative: each category is subject to the obligations of the broader categories it falls within, plus its own additional obligations. This tiered approach avoids onerous obligations being placed on much smaller players – in fact, some provisions are explicitly excluded for small/micro enterprises.

As a reminder from our previous blog post, the categories of intermediary services include (1) providers of hosting services, including online platforms, (2) "online platforms" only (excluding other types of "hosting services"), and (3) "very large online platforms". The last category is defined as those online platforms that provide services to 45 million or more active users in the EU, on a monthly average basis. The most extensive requirements proposed by the Commission would apply to those businesses. The diagram below summarises the different types of intermediary services.

Obligations that apply to all intermediary services

There are four basic obligations that apply to all intermediary service providers:

- EU legal representative: due to the extra-territorial scope of the DSA, organisations that do not have an "establishment" in the EU must appoint an EU representative. The DSA representative will act as a point of contact, but can also be held legally liable for the provider's non-compliance with the DSA (in contrast, the liability of a GDPR representative is unclear). Under the DSA "one stop shop", a provider will be under the jurisdiction of the Member State where its DSA representative is established; if it fails to appoint a DSA representative, it will be subject to the jurisdiction of every Member State, which should incentivise non-EU providers to appoint representatives. The details of the representative must be notified to the Digital Services Coordinator ("DSC") in the Member State where the representative is established.

- Single contact point: providers must designate a single point of contact for communications with authorities and the Commission, make its details publicly available, and specify the language(s) for communications (these must be official EU languages, including at least one official language of the Member State where the service provider has its "main establishment" or legal representative).

- Transparency: publish reports (at least annually) on any content moderation activities during the reporting period, containing certain minimum information. (Exemption for small/micro enterprises.)

- Terms and conditions: to include information on any restrictions on user-provided information, including details of any policies, procedures and tools used for content moderation, including algorithmic decision-making and human review. Additionally, providers are obliged to enforce these restrictions in a diligent, objective and proportionate manner.

Additional obligations

Hosting services

In addition, hosting platforms must implement accessible, user-friendly mechanisms to allow users to notify the provider of illegal content. On receipt of such a notice, the provider is obligated to process it in a timely, diligent and objective manner (automated processing of notices is permitted). A very important point is that notices containing certain information, e.g. the notice-giver's name/email address and why they consider particular content to be illegal, are deemed to give rise to actual knowledge or awareness on the part of the provider, regardless of the information's accuracy or validity. This means the provider automatically loses its liability defence or shield regarding that content. Therefore, this provision incentivises providers to take down or disable access to the relevant content immediately, for their own protection, which has serious implications for content uploaders' freedom of expression.

If the provider decides to remove or disable access to potentially illegal content, the provider must provide the affected users with a statement of reasons meeting certain minimum requirements. Interestingly, hosting providers must publish their decisions and statements of reasons in a public database managed by the Commission but without personal data, so providers will be faced with a practical redaction issue.

Online platforms

On top of the obligations above, online platforms (again except for small/micro enterprises) are required to:

- Implement a free electronic complaint and redress system for users, meeting certain requirements.

- Engage in good faith with, and be bound by any decisions made by, out-of-court dispute settlement bodies certified by the DSC, and reimburse costs if they lose.

- Implement technical and organisational measures to prioritise notices from "trusted flaggers" meeting certain conditions, who are approved as such by the DSC. (Approved trusted flaggers will be listed on a public Commission-maintained database.)

- Put in place measures to suspend, for a reasonable period of time and after having issued a prior warning, services to users who frequently provide manifestly illegal content, and the processing of notices from those who frequently submit manifestly unfounded notices or complaints. Their terms must set out their policy regarding such misuse, including the facts and circumstances they take into account when assessing misuse, and the duration of the suspension.

- Notify authorities if they become aware of any information giving rise to a suspicion that any serious criminal offence involving a threat to life or safety has taken place, is taking place or is likely to take place. Note the low threshold of "a suspicion", cf. "reasonable suspicion".

- If the online platform allows consumers to conclude distance contracts with traders, vet traders against certain minimum criteria (identity etc.) and provide a subset of that information to users. Platforms must suspend traders who fail to provide complete and accurate information.

- Provide additional information in their published transparency reports, including the number of disputes, settlement outcomes and any automated moderation activities, as well as a six-monthly update on average monthly active users in each Member State.

- For any advertising displayed on the platform, name the advertiser and provide meaningful information on the parameters used to determine to whom an ad is displayed.

Very large online platforms

As noted above, these organisations are subject to the most onerous obligations under the DSA. These include:

- Carrying out risk assessments of their services in the EU at least once a year, regarding any significant systemic risks stemming from the functioning and use made of those services. These assessments must take into account illegal content on those services, any negative effects on the fundamental rights of privacy, freedom of expression, non-discrimination and children's rights, and any intentional manipulation of the service (including automated manipulation) with certain negative societal effects.

- Implementing reasonable, proportionate and effective measures to mitigate those risks.

- Conducting at least yearly independent audits (at their own cost) to assess compliance with the due diligence obligations and certain other obligations under the DSA. Such platforms must, within one month after receiving recommendations made in any negative audit report, adopt an audit implementation report setting out measures to address those recommendations.

- If using recommender systems, setting out in their terms the main parameters used and any option for users to modify or influence those parameters (including at least one option not based on profiling).

- Giving access to data to the DSC/Commission on request to monitor the platform's compliance with the DSA.

- Giving access to data to vetted researchers carrying out research to identify and understand the above systemic risks.

- Appointing one or more compliance officer(s) to monitor the platform's compliance with the DSA, with certain criteria, tasks and requirements.

- Providing additional transparency around advertising: API access to a repository containing, for at least one year from the date an ad was displayed, certain information about every ad displayed to users (redacting any personal data of users to whom ads were displayed).

- Providing additional transparency information: publishing the reports mentioned above more frequently (at least every 6 months), and publishing and transmitting to the DSC and the Commission at least once a year (and within 30 days of any audit implementation report) the risk assessment, risk mitigation measures, audit report and audit implementation report. The platform may redact, from the published version, information that may result in disclosure of confidential information of the platform or its users, may cause significant vulnerabilities for the service's security, may undermine public security or may harm users.

The following table summarises the obligations of the different types of intermediary service providers:

| Obligation | All intermediary service providers | Hosting services | Online platforms | Very large online platforms |
| --- | --- | --- | --- | --- |
| Transparency reporting | ✔ | ✔ | ✔ Additional reporting obligations | ✔ Online platforms' obligations plus further reporting obligations |
| Point of contact & language and, if applicable, EU legal representative | ✔ | ✔ | ✔ | ✔ |
| Terms - information on user content restrictions | ✔ | ✔ | ✔ | ✔ |
| Notice and action mechanisms for illegal content | | ✔ | ✔ | ✔ |
| Complaints system and out of court dispute settlement | | | ✔ | ✔ |
| Prioritisation of notices from "trusted flaggers" | | | ✔ | ✔ |
| Suspension of frequent illegal content and frequent unfounded notices | | | ✔ | ✔ |
| Notify suspicion of serious criminal activity | | | ✔ | ✔ |
| Trader information and vetting | | | ✔ (if the online platform allows consumers to conclude distance contracts with traders) | ✔ (if the online platform allows consumers to conclude distance contracts with traders) |
| Advertising transparency for users | | | ✔ | ✔ Additional transparency required – repository and API |
| Risk assessment and mitigation reports | | | | ✔ |
| Independent audits | | | | ✔ |
| Transparency of recommender systems and user choice | | | | ✔ |
| Access to data for authorities and researchers | | | | ✔ |
| Compliance officers | | | | ✔ |
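For teams that want to run a first-pass mapping of their services against the table above, the cumulative structure can be sketched in a few lines of Python. This is an illustrative simplification only, not a compliance tool: the names `Tier`, `Service`, `classify` and `obligations` are hypothetical, the row labels are shortened, and the sketch ignores the small/micro enterprise exemptions, the enhanced versions of reporting and advertising obligations at higher tiers, and the condition attached to trader vetting.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import List

# Threshold in the proposal: 45 million or more average monthly active users.
VLOP_THRESHOLD = 45_000_000


class Tier(IntEnum):
    """Hypothetical labels for the four cumulative categories in the table."""
    INTERMEDIARY = 1
    HOSTING = 2
    ONLINE_PLATFORM = 3
    VERY_LARGE_ONLINE_PLATFORM = 4


# Obligations first introduced at each tier (labels shortened from the table).
NEW_AT_TIER = {
    Tier.INTERMEDIARY: [
        "Transparency reporting",
        "Point of contact and EU legal representative",
        "Terms: user content restrictions",
    ],
    Tier.HOSTING: ["Notice and action mechanisms"],
    Tier.ONLINE_PLATFORM: [
        "Complaints system and out-of-court dispute settlement",
        "Trusted flaggers",
        "Suspension for misuse",
        "Notify suspicion of serious criminal activity",
        "Trader information and vetting",
        "Advertising transparency",
    ],
    Tier.VERY_LARGE_ONLINE_PLATFORM: [
        "Risk assessment and mitigation",
        "Independent audits",
        "Recommender system transparency",
        "Data access for authorities and researchers",
        "Compliance officers",
    ],
}


@dataclass
class Service:
    is_hosting: bool
    is_online_platform: bool
    avg_monthly_active_users: int


def classify(service: Service) -> Tier:
    """Return the narrowest category the service falls into."""
    if service.is_online_platform:
        if service.avg_monthly_active_users >= VLOP_THRESHOLD:
            return Tier.VERY_LARGE_ONLINE_PLATFORM
        return Tier.ONLINE_PLATFORM
    if service.is_hosting:
        return Tier.HOSTING
    return Tier.INTERMEDIARY


def obligations(tier: Tier) -> List[str]:
    """Cumulative: a tier inherits every obligation of the tiers below it."""
    result: List[str] = []
    for t in Tier:
        if t <= tier:
            result.extend(NEW_AT_TIER[t])
    return result
```

Calling `obligations(classify(service))` for each service offered in the EU gives a rough checklist to refine against the text of the proposal, including the exemptions and conditions that the sketch leaves out.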

Depending on where your organisation falls in the matrix above, there may be quite extensive obligations to comply with. As will be seen in our next blog post on the DSA, the enforcement actions proposed for non-compliance are expected to be severe, including potentially fines with ceilings exceeding those under GDPR.

Although the DSA is likely to be amended during its legislative passage, we recommend that organisations falling within its scope start to work out where in the matrix their various services offered in the EU would fall, the potential obligations they may be subject to, and the practical actions they may need to take. Those actions include updating their terms and conditions and making any changes required to their compliance policies and processes, e.g. (where relevant) implementing complaints and dispute resolution systems and recruiting compliance officers. Lobbying efforts on the DSA package are likely to be ongoing for some time, from both industry bodies and civil society organisations.

This article was written by Director, Kuan Hon and solicitor, Charley Guile.